🔸 TL;DR

HotSpot made Java fast by using runtime profiling + JIT compilation to optimize the code you actually execute—often removing the cost of high-level abstractions—so you don’t have to write C++-style code to get great performance. 🚀

🔸 WHAT IS HOTSPOT JVM?

HotSpot is the JVM implementation (first released in 1999) that made Java performance “serious”: not by pretending Java is C++, but by optimizing the code that is actually running, while it runs.

It’s the engine behind the idea that Java can feel high-level… and still run hot, long-lived code at speeds that are often close to native. 🚀

🔸 THE “ZERO-OVERHEAD ABSTRACTION” FEELING (IN PRACTICE)

Java gives you abstraction: interfaces, virtual calls, lambdas, streams…

HotSpot tries to make those abstractions “disappear” when possible:

▪️ Inline small methods (even through interfaces, when profiling shows a call site only sees one or two implementations)

▪️ Remove bounds checks when proven safe

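▪️ Escape analysis to avoid heap allocation for objects that never leave a method (their fields end up in registers or stack slots, or the object is eliminated entirely)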

▪️ Lock elision when the locked object is provably never shared with another thread

Result: you write clean code… the runtime fights the overhead for you. 🧠✨
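To make that list concrete, here is a minimal sketch. The class and method names (`AbstractionDemo`, `withAbstractions`, `handFlattened`) are made up for illustration. The first method uses a lambda and a short-lived object per iteration; the second is roughly what the JIT may reduce it to once the code is hot. Whether HotSpot actually does so depends on the JVM version, flags, and the profile it collects.

```java
import java.util.function.IntUnaryOperator;

// Hypothetical demo class; none of these names come from a library.
final class AbstractionDemo {

    // Small immutable object that never leaves withAbstractions().
    // Escape analysis may let the JIT skip the heap allocation.
    static final class Point {
        private final int x;
        private final int y;
        Point(int x, int y) { this.x = x; this.y = y; }
        int squaredLength() { return x * x + y * y; }
    }

    // "Clean" version: a lambda call plus a temporary object per iteration.
    // Assumes xs and ys have the same length.
    static long withAbstractions(int[] xs, int[] ys, IntUnaryOperator scale) {
        long total = 0;
        // The i < xs.length condition is what can let the JIT prove
        // that xs[i] is in range and drop that bounds check.
        for (int i = 0; i < xs.length; i++) {
            Point p = new Point(scale.applyAsInt(xs[i]), scale.applyAsInt(ys[i]));
            total += p.squaredLength();
        }
        return total;
    }

    // Hand-flattened version: no lambda, no object, same arithmetic.
    static long handFlattened(int[] xs, int[] ys) {
        long total = 0;
        for (int i = 0; i < xs.length; i++) {
            int x = xs[i] * 2;
            int y = ys[i] * 2;
            total += x * x + y * y;
        }
        return total;
    }

    public static void main(String[] args) {
        int[] xs = {1, 2, 3};
        int[] ys = {4, 5, 6};
        System.out.println(withAbstractions(xs, ys, v -> v * 2)); // prints 364
        System.out.println(handFlattened(xs, ys));                // prints 364
    }
}
```

Both methods return the same value. The point is that you can usually keep the first style and let the runtime try to close the gap.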

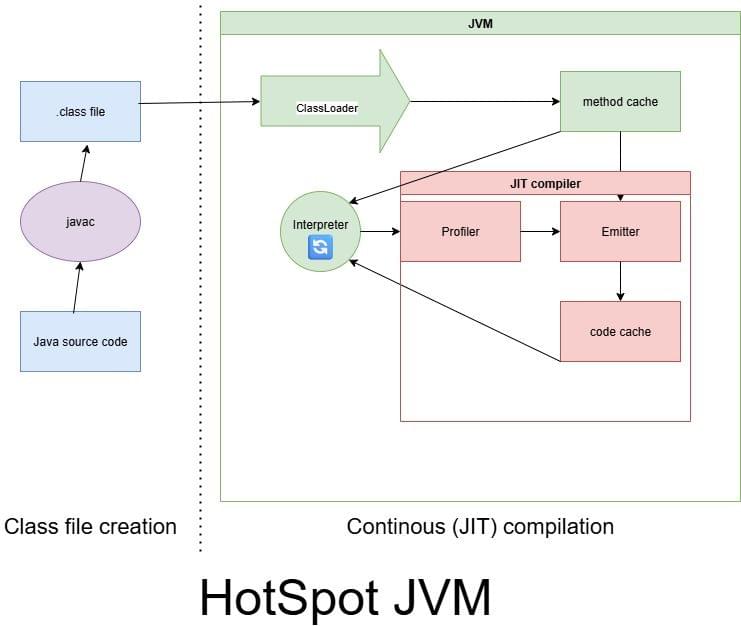

🔸 JIT COMPILATION: THE SECRET SAUCE

HotSpot starts by interpreting bytecode, then uses a JIT (Just-In-Time) compiler to turn hot code paths into optimized machine code.

Not “compile once, hope for the best”… but optimize based on reality: what’s executed most, what branches are taken, what types you actually see at runtime.
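You can watch this happen from inside a program. The sketch below (the class name `JitObserver` and the `mix` function are illustrative assumptions) uses the standard `CompilationMXBean` to check how much time the JIT spent compiling while a hot loop ran. The numbers will differ per machine and JVM.

```java
import java.lang.management.CompilationMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical demo class for observing JIT activity.
final class JitObserver {

    // Tiny method that gets called often enough to become "hot".
    static int mix(int value) {
        return (value * 31) ^ (value >>> 3);
    }

    static long runHotLoop(int iterations) {
        long acc = 0;
        for (int i = 0; i < iterations; i++) {
            acc += mix(i);
        }
        return acc;
    }

    public static void main(String[] args) {
        // Returns null when the JVM has no compilation system.
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        if (jit == null || !jit.isCompilationTimeMonitoringSupported()) {
            System.out.println("No JIT compilation data available on this JVM");
            return;
        }
        long before = jit.getTotalCompilationTime();
        long result = runHotLoop(50_000_000);
        long after = jit.getTotalCompilationTime();

        System.out.println("JIT compiler: " + jit.getName());
        System.out.println("Result: " + result);
        System.out.println("Compilation time during loop (ms): " + (after - before));
    }
}
```

For a per-method view, running the same program with the HotSpot flag `-XX:+PrintCompilation` logs each method as it gets compiled.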

🔸 WHY JIT IS A BIG DEAL

Advantages that are easy to underestimate:

▪️ You don’t have to write low-level tricks everywhere (manual inlining, branch hacks, etc.)

▪️ The JVM can optimize differently per environment (CPU, workload, traffic pattern)

▪️ It can adapt over time as your app warms up and behavior changes

In short: less low-level complexity for developers, and more of the tuning done by the runtime based on what it actually measures. ✅

🔸 PGO: PROFILE-GUIDED OPTIMIZATION (THE JVM DOES IT LIVE)

In native builds, PGO often means: run → collect profiles → rebuild with profiles.

HotSpot does a runtime-flavored version:

▪️ It collects profiles while your app runs (hot methods, types, branch probabilities)

▪️ Then re-optimizes the most valuable code paths

That’s why “warmup” exists—and why steady-state performance can be impressive. 🔥
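A rough way to see warmup yourself: time the same work in several rounds and compare the first rounds to the later ones. This is only a sketch (the class `WarmupDemo` and method `weightedSum` are invented for the example), and hand-rolled timing like this is noisy. For real measurements, a harness such as OpenJDK's JMH is the safer choice.

```java
// Hypothetical demo class: times the same workload over several rounds.
final class WarmupDemo {

    // Simple workload: sum of data[i] * (i + 1).
    static long weightedSum(int[] data) {
        long total = 0;
        for (int i = 0; i < data.length; i++) {
            total += (long) data[i] * (i + 1);
        }
        return total;
    }

    public static void main(String[] args) {
        int[] data = new int[10_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i;
        }

        long sink = 0; // keeps the result live so the work is not optimized away
        for (int round = 1; round <= 10; round++) {
            long start = System.nanoTime();
            for (int call = 0; call < 2_000; call++) {
                sink += weightedSum(data);
            }
            long elapsedMicros = (System.nanoTime() - start) / 1_000;
            // Early rounds typically include interpretation and compilation cost.
            System.out.printf("round %2d: %d us%n", round, elapsedMicros);
        }
        System.out.println("checksum: " + sink);
    }
}
```

On a typical HotSpot run the first round or two tend to be slower than the rest, but the exact shape depends on the machine, JVM version, and flags.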

🔸 ONE MORE RELEVANT THING PEOPLE FORGET

HotSpot can de-optimize.

If it made an assumption (e.g. “this call always sees type X”) and reality later changes, it can discard the optimized code, fall back to a slower but safe version, and recompile with the new information.

That flexibility is a huge part of why it can be aggressive and safe. 🛟
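Here is a small sketch of the kind of code that can trigger this. The names (`DeoptDemo`, `Shape`, `Square`, `Circle`) are made up. In phase 1 the call site only ever sees `Square`, so the JIT may speculate on that. In phase 2 a `Circle` shows up and the speculation no longer holds. Whether a deoptimization actually happens depends on the JVM version and flags.

```java
// Hypothetical demo class: a call site that changes from one type to two.
final class DeoptDemo {

    interface Shape {
        double area();
    }

    static final class Square implements Shape {
        private final double side;
        Square(double side) { this.side = side; }
        public double area() { return side * side; }
    }

    static final class Circle implements Shape {
        private final double radius;
        Circle(double radius) { this.radius = radius; }
        public double area() { return Math.PI * radius * radius; }
    }

    // The s.area() call is the interesting call site.
    static double totalArea(Shape[] shapes) {
        double total = 0;
        for (Shape s : shapes) {
            total += s.area();
        }
        return total;
    }

    public static void main(String[] args) {
        Shape[] onlySquares = { new Square(2), new Square(3) };
        Shape[] mixed = { new Square(2), new Circle(1) };

        double sink = 0;
        // Phase 1: only Square reaches the call site.
        for (int i = 0; i < 1_000_000; i++) {
            sink += totalArea(onlySquares);
        }
        // Phase 2: Circle appears, which can invalidate the earlier assumption.
        for (int i = 0; i < 1_000_000; i++) {
            sink += totalArea(mixed);
        }
        System.out.println(sink);
    }
}
```

Running it with `-XX:+PrintCompilation` and looking for `made not entrant` next to `totalArea` is one way to check whether the compiled code was thrown away.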

🔸 TAKEAWAYS

▪️ HotSpot (1999) is a major reason Java can be both productive and fast

▪️ JIT = performance based on real runtime behavior, not compile-time guesses

▪️ PGO-like profiling happens continuously during execution

▪️ Abstractions aren’t “free”… but HotSpot often makes them feel close to free

▪️ Warmup matters: measure performance correctly (cold vs steady-state)

#Java #JVM #HotSpot #Performance #JIT
