As part of my journey as a VMware vExpert 2025 🌟, I’ve started exploring labs that combine Artificial Intelligence (AI), Machine Learning (ML), and the Tanzu Platform.

This first lab gave me a hands-on view of how to host, serve, and manage AI/ML models on private infrastructure — while also introducing the essential concepts behind MLOps, the DevOps-flavored discipline tailored to machine learning.

Let me take you through the highlights 👇

🔹 Part 1: AI, ML, and MLOps – A Global View

Before diving into VMware Tanzu, the lab walks through the foundations of AI and ML:

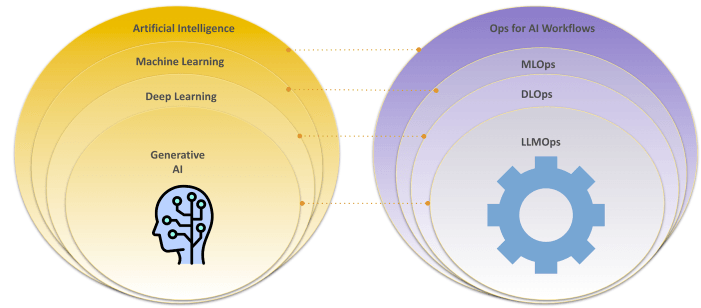

- 🤖 Artificial Intelligence (AI): Systems that perform tasks normally requiring human intelligence.

- 📊 Machine Learning (ML): Algorithms that learn patterns from data instead of being explicitly programmed.

- 🧠 Deep Learning (DL): Multi-layer neural networks, loosely inspired by the brain, that learn features directly from data.

- ✨ Generative AI: Models that generate new text, images, and more from learned patterns.

What about MLOps?

- ⚙️ MLOps: Applying DevOps principles to ML — automating deployment, monitoring, and retraining of models.

- 🧬 DLOps: Focusing specifically on deep learning models.

- 📚 LLMOps: Targeted at Large Language Models and Generative AI.

The Key Personas

In any ML project, multiple roles collaborate:

- 👷 ML Platform Engineer – Builds and manages the environment.

- 🛠️ MLOps Engineer – Operationalizes models into production pipelines.

- 🔬 Data Scientist – Designs, trains, and experiments with models.

And of course, depending on company size, roles may overlap or split into more specialized positions (Data Engineer, ML Engineer, AI Engineer…).

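👉 Takeaway: MLOps is iterative. Models must adapt to data drift (the distribution of input data changes) and concept drift (the relationship between inputs and the target changes), which makes continuous monitoring and retraining essential.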
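To make the drift idea concrete, here is a minimal sketch of a data-drift check using the Population Stability Index (PSI). PSI is just one widely used drift statistic that I picked for illustration; it is not necessarily what the lab or Evidently does under the hood. The bin edges, the `eps` smoothing term, the 0.2 threshold, and the function names are all my own assumptions.

```python
"""Minimal data-drift check with the Population Stability Index (PSI).

Illustrative sketch: PSI, the bin edges, and the 0.2 threshold are assumptions,
not the lab's or Evidently's implementation.
"""
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each bin [edges[i], edges[i+1]).

    Values below the first edge are counted in the first bin, values at or
    above the last edge in the last bin.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        # Advance to the bin whose lower edge is the last one <= v.
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    return [c / len(values) for c in counts]


def psi(reference: Sequence[float], current: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (cur - ref) * ln(cur / ref); eps avoids log(0)."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(current, edges)
    return sum((c - r) * math.log((c + eps) / (r + eps)) for r, c in zip(ref, cur))


def needs_retraining(reference: Sequence[float], current: Sequence[float],
                     edges: Sequence[float], threshold: float = 0.2) -> bool:
    """Flag drift when PSI exceeds a rule-of-thumb threshold (0.2 is an assumption)."""
    return psi(reference, current, edges) > threshold


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    reference = [0.1, 0.3, 0.6, 0.9] * 25   # synthetic training-time values, spread evenly
    stable = [0.1, 0.3, 0.6, 0.9] * 25      # synthetic production data, same distribution
    shifted = [0.9] * 80 + [0.1] * 20       # synthetic production data piled into two bins
    print(needs_retraining(reference, stable, edges))   # False
    print(needs_retraining(reference, shifted, edges))  # True
```

Note that a check like this on input features only catches data drift. Concept drift usually shows up as degrading model performance, so spotting it requires ground-truth labels and metric monitoring on top of input checks.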

🔹 Part 2: VMware Tanzu in Action

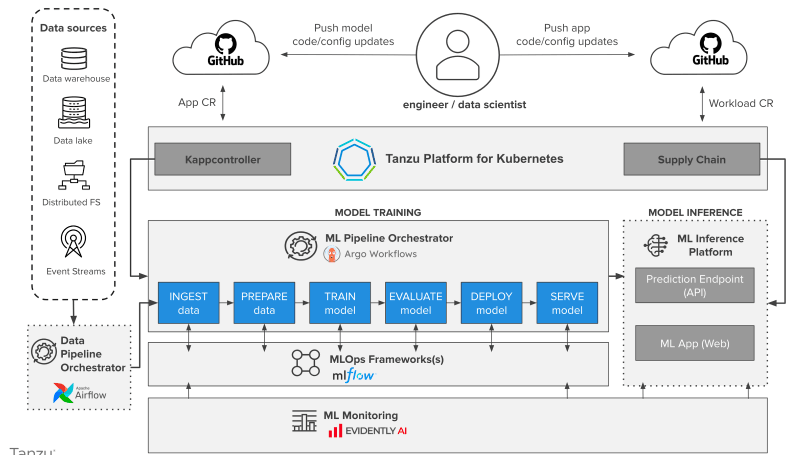

After the theory, the lab shifts into practice with VMware Tanzu 🌀.

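The use case: 📸 Build an object detection platform for images, using the CIFAR-10 dataset (60,000 labeled 32×32 color images across 10 classes). Strictly speaking, CIFAR-10 is an image classification benchmark: each image carries a single label and no bounding boxes.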

The Required Stack

To achieve this, the lab sets up a full MLOps pipeline on Tanzu, including:

- 📓 Experimentation environment (Jupyter notebooks & alternatives).

- 🔄 Pipelines & orchestration with Argo Workflows.

- 📦 Model registry & versioning with MLflow.

- 📚 Data catalog with DataHub.

- 👀 ML observability with Evidently.

- 🚀 CI/CD integration (GitOps-ready) for automated workflows.

All of this runs on Tanzu’s cloud-agnostic foundation, so the same ML workloads can run on public clouds or on-premises infrastructure, without requiring deep Kubernetes expertise.
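To picture how two pieces of that stack fit together, here is a toy sketch in plain Python (3.9+ for `graphlib`): pipeline steps run in dependency (DAG) order, roughly the way a workflow engine such as Argo Workflows orders its tasks, and each run registers a new model version, loosely mimicking what a registry such as MLflow's provides. Everything here is hypothetical: the step names, the `ToyModelRegistry` class, and the model name are my own inventions and are not the Argo or MLflow APIs.

```python
"""Toy MLOps pipeline sketch (hypothetical, not Argo or MLflow APIs).

Steps run in dependency (DAG) order, and each run registers a new model
version in an in-memory stand-in for a model registry.
"""
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

Context = dict
Step = Callable[[Context], None]


class ToyModelRegistry:
    """In-memory stand-in for a model registry: versions auto-increment per model name."""

    def __init__(self) -> None:
        self.versions: Dict[str, List[dict]] = {}

    def register(self, name: str, metadata: dict) -> int:
        entries = self.versions.setdefault(name, [])
        version = len(entries) + 1
        entries.append({"version": version, "metadata": metadata})
        return version

    def latest(self, name: str) -> dict:
        return self.versions[name][-1]


def run_pipeline(steps: Dict[str, Step], deps: Dict[str, Set[str]]) -> List[str]:
    """Run every step after all of its upstream dependencies; return the execution order."""
    context: Context = {}
    order = list(TopologicalSorter(deps).static_order())
    for name in order:
        steps[name](context)
    return order


registry = ToyModelRegistry()


# Hypothetical step bodies: placeholders for real data loading and training.
def ingest(ctx: Context) -> None:
    ctx["data"] = list(range(10))  # stands in for loading CIFAR-10 images


def profile(ctx: Context) -> None:
    ctx["profile"] = {"rows": len(ctx["data"])}  # stands in for a data-quality/catalog step


def train(ctx: Context) -> None:
    ctx["model"] = {"trained_on": len(ctx["data"])}  # stands in for model training


def register(ctx: Context) -> None:
    ctx["version"] = registry.register("cifar10-classifier", {**ctx["model"], **ctx["profile"]})


STEPS: Dict[str, Step] = {"ingest": ingest, "profile": profile, "train": train, "register": register}
# Each key lists the steps it depends on (its upstream tasks).
DEPS: Dict[str, Set[str]] = {
    "ingest": set(),
    "profile": {"ingest"},
    "train": {"ingest"},
    "register": {"train", "profile"},
}

if __name__ == "__main__":
    print(run_pipeline(STEPS, DEPS))
    print(registry.latest("cifar10-classifier"))
```

Because `profile` and `train` depend only on `ingest`, a real orchestrator could run them in parallel; this sketch simply executes them sequentially in one valid topological order.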

Why Tanzu?

Because Tanzu provides:

- ✅ A unified way to deploy ML workloads.

- ✅ Flexibility to mix open-source tools.

- ✅ Enterprise-grade scalability and governance.

👉 Takeaway: Tanzu makes it possible to manage end-to-end ML lifecycles — from experimentation to production, observability, and retraining.

🎯 Conclusion

This first lab was a perfect way to:

- Get familiar with AI/ML fundamentals 🔍

- Understand the roles & lifecycle of MLOps 🔄

- See how VMware Tanzu enables real ML projects ⚡

As a vExpert 2025, I’ll keep sharing my journey with Tanzu and AI/ML here on LinkedIn and on my dedicated blog 📝. Stay tuned for the next modules, where we’ll go even deeper into hands-on MLOps with Tanzu.